Your agents are currently acting as "trusted proxies" for untrusted actors, bypassing Zero Trust by inheriting the privileges of the developer who spun them up. If you haven't decoupled your agent's identity from the human who created it, you've built a high-speed privilege escalation engine.

The Deep Dive

The Identity Proxy Trap: Why Your Agents Are a Security Liability

Most engineering teams treat agents as "just another service account," which misunderstands the agentic lifecycle. Unlike a static microservice, an agent is dynamic; its "intent" is generated at runtime by a Large Language Model (LLM). Executing unverified, LLM-generated actions with the full privileges of a developer's identity hands that developer's entire blast radius to any successful injection.

The OWASP GenAI Security Project ranks prompt injection, including its indirect form, and excessive agency among the top risks for LLM applications. Identity hijacking of an agent sits where the two meet. In a typical scenario, an agent might be tasked with summarizing a customer's email via a RAG pipeline. If that email contains a hidden prompt instructing the agent to delete files, the agent can comply whenever it has inherited broad "Full Access" permissions.

The agent acts as a trusted proxy, but the data it consumes remains untrusted. Without a distinct identity, the system cannot distinguish between a legitimate developer command and an external injection attack. Shadow AI isn't just about unapproved software; it’s about unmanaged identities performing autonomous actions without a cryptographic audit trail.

The MCP Gap: Why Traditional IAM Fails Stateless Agents

The industry is rapidly gravitating toward the **Model Context Protocol (MCP)** for connecting LLMs to data sources like Google Drive or internal databases. While MCP provides excellent interoperability, it is often implemented in a way that ignores session-level intent. Traditional IAM systems, built on stateful sessions and long-lived tokens, cannot handle the granular, per-tool-call verification required by an autonomous agent.

Standard OAuth scopes are too coarse for this environment. A scope is granted once and holds for the life of the token, so it cannot express "read up to a thousand Slack messages for this task, but never delete one" when the integration's token happens to carry both permissions. Current IAM architectures focus on the 'Who' and the 'What,' but they completely ignore the 'Why': the agent's intent derived from the LLM's reasoning trace.
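
To make the per-tool-call idea concrete, here is a minimal sketch of a deny-by-default check that runs on every call rather than once per token. The `ToolGrant` and `SessionPolicy` names, the `read_message` action, and the call budget are all hypothetical illustrations, not part of MCP or any IAM product.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolGrant:
    """One permitted (tool, action) pair with a per-session call budget."""
    tool: str
    action: str
    max_calls: int


@dataclass
class SessionPolicy:
    """Hypothetical per-session policy, evaluated on every tool call."""
    agent_id: str
    grants: tuple[ToolGrant, ...]
    used: dict = field(default_factory=dict, init=False)

    def authorize(self, tool: str, action: str) -> bool:
        # Deny by default: only explicitly granted pairs can pass.
        for grant in self.grants:
            if (grant.tool, grant.action) == (tool, action):
                count = self.used.get((tool, action), 0)
                if count >= grant.max_calls:
                    return False  # budget for this session is exhausted
                self.used[(tool, action)] = count + 1
                return True
        return False


policy = SessionPolicy(
    agent_id="procurement-agent-04",
    grants=(ToolGrant("slack", "read_message", max_calls=1000),),
)
print(policy.authorize("slack", "read_message"))    # granted
print(policy.authorize("slack", "delete_message"))  # never granted
```

The point of the design is that the decision lives next to the tool call, so a prompt-injected "delete" request fails even though the agent is legitimately connected to Slack.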

We are seeing a shift toward the "Agentic Contract Model," where the relationship between the agent and the resource is governed by a cryptographic contract. However, most CTOs are still stuck in the "API Key" mindset. Relying on shared secrets for autonomous workflows applies a 2010 solution to a 2025 problem, and it creates a single point of failure.

Agentic Identity Management (AIM) and Agent Cards

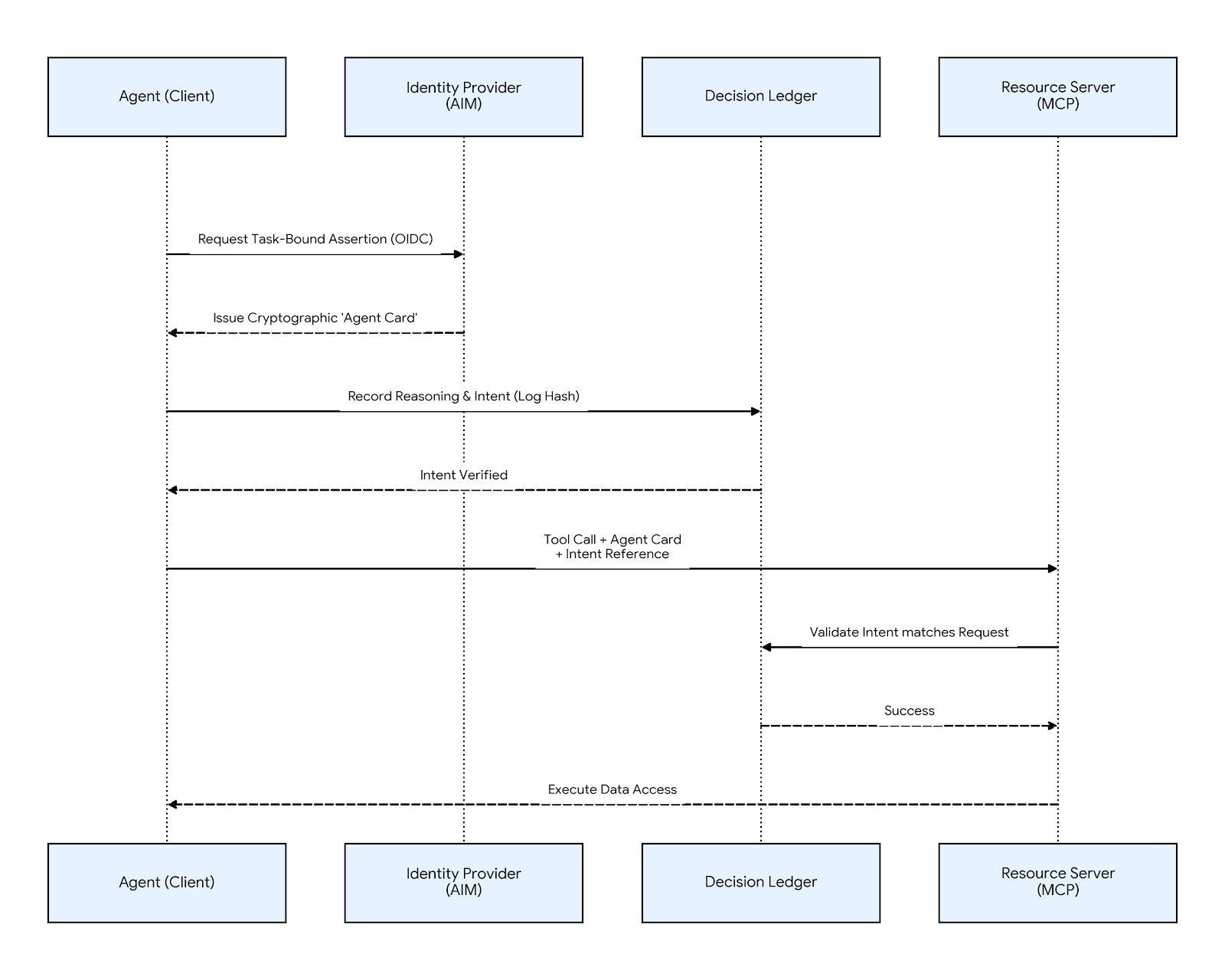

To solve the shadow identity crisis, you must move toward Agentic Identity Management (AIM). The core of AIM is the Agent Card, a metadata-rich, cryptographically signed identity document that travels with every agentic request. By requiring agents to present an Agent Card, you move from 'Identity-based access' to 'Context-bound authorization.'

Unlike a static JWT that only identifies its bearer, an Agent Card carries context: the Agent ID, the Model Version, a hash of the system prompt, and the "Parent Identity" of the human user. The system prompt hash acts as a versioned blueprint for behavior, so every autonomous action is tied back to a specific model configuration and a verified human sponsor.

Implementing AIM also requires the deployment of Decision Ledgers. A Decision Ledger is an immutable log where the agent must record its "reasoning" or intent before a transaction is executed through a tool protocol such as MCP. This creates a 'Double-Entry' bookkeeping system: one entry for the LLM's intent, and one for the tool's execution.
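
One way to picture the double-entry idea is a hash-chained, append-only log in which an execution entry is refused unless a matching intent entry already exists. The sketch below is an in-memory stand-in under that assumption; `DecisionLedger`, its method names, and the record fields are hypothetical, and a real deployment would need durable, tamper-resistant storage.

```python
import hashlib
import json
import time


class DecisionLedger:
    """In-memory, hash-chained log; a stand-in for a real immutable store."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries = []

    @staticmethod
    def _digest(record: dict) -> str:
        return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()

    def _append(self, kind: str, tx_id: str, body: dict) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"kind": kind, "tx_id": tx_id, "body": body,
                  "ts": time.time(), "prev": prev}
        record["hash"] = self._digest(record)  # hash covers everything but itself
        self.entries.append(record)
        return record

    def record_intent(self, tx_id: str, reasoning: str, tool: str) -> dict:
        return self._append("intent", tx_id, {"reasoning": reasoning, "tool": tool})

    def record_execution(self, tx_id: str, result: str) -> dict:
        # Second entry of the pair: refuse it if no intent was logged first.
        if not any(e["kind"] == "intent" and e["tx_id"] == tx_id for e in self.entries):
            raise ValueError(f"no intent recorded for {tx_id}")
        return self._append("execution", tx_id, {"result": result})

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            unsigned = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or self._digest(unsigned) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


ledger = DecisionLedger()
ledger.record_intent("tx-789", "Customer asked for an email summary", "mail.read")
ledger.record_execution("tx-789", "summary returned")
print(ledger.verify())
```

Chaining each entry to the previous hash makes after-the-fact edits detectable, which is the property the audit trail depends on; it does not by itself prevent an attacker with write access from rebuilding the whole chain.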

Finally, you must implement Cryptographic Role Assertions using ephemeral credentials. The agent must prove it is authorized to hold a specific role for a limited duration or task. Task-bound assertions ensure that even if an agent is compromised via prompt injection, the blast radius is limited to the specific window defined in the assertion.
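
As a rough illustration of a task-bound, time-boxed assertion, the sketch below issues and checks a short-lived token. To stay within the standard library it uses an HMAC with a shared demo key, which is an assumption made for brevity; the approach described above calls for asymmetric signatures and properly managed keys. The function names, claim fields, and the `po-approver` role are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time

# Demo-only key (assumption). In practice use asymmetric keys from a KMS.
DEMO_KEY = b"demo-only-key"


def issue_assertion(agent_id, role, task_id, ttl_seconds, now=None):
    """Return 'payload.tag' binding a role to one task for a short window."""
    now = time.time() if now is None else now
    claims = {"agent_id": agent_id, "role": role,
              "task_id": task_id, "exp": int(now) + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    tag = hmac.new(DEMO_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + tag


def check_assertion(token, role, task_id, now=None):
    """Accept only an untampered, unexpired assertion for this role and task."""
    now = time.time() if now is None else now
    try:
        payload, tag = token.split(".")
    except ValueError:
        return False
    expected = hmac.new(DEMO_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag, expected):
        return False  # integrity check failed
    claims = json.loads(base64.urlsafe_b64decode(payload))
    return (claims["role"] == role
            and claims["task_id"] == task_id
            and now < claims["exp"])


token = issue_assertion("procurement-agent-04", "po-approver", "task-1",
                        ttl_seconds=60, now=1000)
print(check_assertion(token, "po-approver", "task-1", now=1030))  # inside window
print(check_assertion(token, "po-approver", "task-1", now=1061))  # after expiry
```

Because the role, the task, and the expiry are all inside the signed payload, a hijacked agent cannot reuse the assertion for a different task or after the window closes.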

From the Trenches

The Tactic: Stop using long-lived service account keys for agents. Instead, implement a Manifest-based Agent Identity using a JSON structure signed with an Ed25519 key.

The Code (Example Agent Card Manifest)

    {
      "header": { "alg": "EdDSA", "typ": "AgentCard" },
      "payload": {
        "agent_id": "procurement-agent-04",
        "version": "2.1.0",
        "provider_meta": {
          "model": "anthropic-claude-3-5-sonnet",
          "system_prompt_hash": "sha256:e3b0c44298fc1c149afbf4c8996fb9..."
        },
        "context": {
          "parent_user": "urn:okta:user:00u123456789",
          "session_id": "sess_abc123",
          "intent_ledger_ref": "https://ledger.corp/logs/p-04/tx-789"
        },
        "exp": 1735689600
      },
      "signature": "S6bYyWp4E_Z7..."
    }
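
A receiving service would first verify the Ed25519 signature with a cryptography library (not shown here), then check the claims themselves. The sketch below covers only that second step, plus one possible way to produce canonical bytes for signing. The helper names, the canonicalization choice (sorted keys, compact separators), and the sample prompt are assumptions for illustration, not a published Agent Card specification.

```python
import hashlib
import json


def system_prompt_hash(prompt: str) -> str:
    """Hash in the 'sha256:<hex>' form used by the manifest."""
    return "sha256:" + hashlib.sha256(prompt.encode("utf-8")).hexdigest()


def signing_input(card: dict) -> bytes:
    """Canonical bytes a signer could cover: header + payload, sorted keys."""
    body = {"header": card["header"], "payload": card["payload"]}
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def check_claims(card: dict, deployed_prompt: str, now: int) -> list:
    """Return a list of problems; an empty list means the claims look acceptable."""
    problems = []
    payload = card["payload"]
    if card["header"].get("alg") != "EdDSA":
        problems.append("unexpected alg")
    if now >= payload["exp"]:
        problems.append("card expired")
    if payload["provider_meta"]["system_prompt_hash"] != system_prompt_hash(deployed_prompt):
        problems.append("system prompt hash mismatch")
    if not payload["context"].get("parent_user"):
        problems.append("missing parent_user")
    return problems


PROMPT = "You are a procurement assistant."  # hypothetical system prompt
card = {
    "header": {"alg": "EdDSA", "typ": "AgentCard"},
    "payload": {
        "agent_id": "procurement-agent-04",
        "provider_meta": {"system_prompt_hash": system_prompt_hash(PROMPT)},
        "context": {"parent_user": "urn:okta:user:00u123456789"},
        "exp": 1735689600,
    },
}
print(check_claims(card, PROMPT, now=1735689000))  # prints []
```

Pinning the prompt hash means a silently edited system prompt shows up as a claim mismatch instead of passing as the same agent.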

The Governance Check

The Risk: Agent Hijacking via Token Theft. If a human's OAuth token ends up in the agent's context window, or in content the agent retrieves through RAG, that token can be leaked via prompt injection.

The Fix: Use Token Exchange (RFC 8693). The agent should never handle the human's primary token. Use your identity provider to exchange the human token for a short-lived "Agent Token" with a scope limited strictly to the tools required for the current session.
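
For orientation, this is roughly what the form body of an RFC 8693 token exchange request looks like. The parameter names and URN values come from the RFC; the function name, the scope strings, the audience, and the placeholder tokens are hypothetical, and which parameters your identity provider accepts is something to confirm in its documentation. The exchange should run in a backend broker, so the human token never enters the agent's context.

```python
from urllib.parse import parse_qs, urlencode

TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
ACCESS_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:access_token"


def build_exchange_body(human_token, agent_token, scopes, audience):
    """Build the form-encoded body for a token exchange request."""
    params = {
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": human_token,        # the human the agent acts for
        "subject_token_type": ACCESS_TOKEN_TYPE,
        "actor_token": agent_token,          # the agent's own credential
        "actor_token_type": ACCESS_TOKEN_TYPE,
        "requested_token_type": ACCESS_TOKEN_TYPE,
        "audience": audience,
        "scope": " ".join(scopes),           # only what this session needs
    }
    return urlencode(params)


body = build_exchange_body(
    human_token="<human-access-token>",      # placeholder, never logged
    agent_token="<agent-credential>",        # placeholder
    scopes=["slack:read"],                   # hypothetical scope name
    audience="https://tools.example.internal",  # hypothetical audience
)
print(parse_qs(body)["grant_type"])
```

The body would be POSTed to the identity provider's token endpoint with client authentication; the response's access token is the short-lived "Agent Token" handed to the agent.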
